Enterprise “Lift and Shift” to the Public Cloud Requires a Newer Type of API and Cloud Security Program to Prevent Data Breaches

- By Doug Dooley

- Apr 26, 2021

One of our best customers is a Fortune 50 financial services company for homeowners across America. Their goal is and always has been to keep customer information private and secure. One of the principal lessons of the 2008 global financial crisis is the importance of American homeownership stability and general accountability.

Keeping American homeowners’ data and privacy safe further promotes stability and accountability. In this world of ever-increasing data theft and security breaches, keeping up with the threat landscape has never been more challenging or more critical. For any company responsible for protecting data while migrating it to the cloud, it is enormously challenging to securely “lift and shift” business-critical enterprise applications with millions of records from on-premise data centers to a public cloud environment. As this organization took on this massive effort, they laid out a detailed plan, selected best-of-breed security solutions, and ended up with one of the most innovative projects ever undertaken in an enterprise company.

In addition, they took it upon themselves to embrace forward-thinking technology experts bringing to light the importance of API security. They identified early that APIs are the new vector of attack because of the massive amounts of data-in-motion. Further, they created a new blueprint for cloud native API security and digital transformation for any organization looking to do the same.

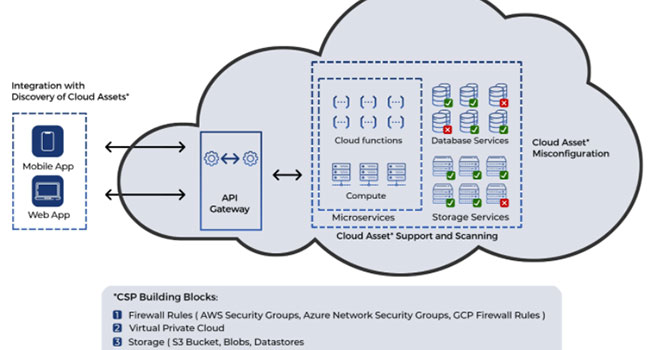

Recently the company launched an enormous internal project they called “The Cloud Native API Security Program” that not only assessed and addressed their complete on-premise security posture, but also expedited a move to the API- and microservices-based delivery commonly found in public cloud environments. When entering the migration phase for their business-critical applications, their security leadership team concluded that they needed to focus more on understanding and protecting APIs, which are inherently the digital nervous system of cloud-hosted applications.

Many of their existing tech vendors and security tools used inside their private data centers were not able to address security controls in a public cloud environment. Many of those tools had no way to discover or inventory the APIs and messaging queues that are pervasive in cloud applications. So the project involved deep analysis and time spent researching vendor solutions that could help with the entire digital migration while keeping customer data secure. Given budgetary guidelines and a strict time window, their team deployed solutions that would integrate easily and scale, resulting in a better overall security posture.
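To make the discovery and inventory problem concrete, here is a minimal sketch of the underlying idea: compare the endpoints actually observed in an environment against the documented inventory, and surface anything undocumented. The `Endpoint` type, the `find_undocumented` helper, and the sample paths are all hypothetical and chosen for illustration; they do not describe how any particular vendor product works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """A hypothetical API endpoint record: HTTP method plus path."""
    method: str
    path: str


def find_undocumented(observed, documented):
    """Return observed endpoints that are missing from the documented inventory."""
    missing = set(observed) - set(documented)
    # Sort for stable, readable output.
    return sorted(missing, key=lambda e: (e.path, e.method))


# Illustrative data only: what the team believes exists vs. what is actually seen.
documented = [Endpoint("GET", "/loans"), Endpoint("POST", "/loans")]
observed = [
    Endpoint("GET", "/loans"),
    Endpoint("POST", "/loans"),
    Endpoint("GET", "/loans/export"),
]

shadow = find_undocumented(observed, documented)
for endpoint in shadow:
    print(f"undocumented: {endpoint.method} {endpoint.path}")
```

An endpoint that shows up only in the observed set is exactly the kind of API a tool without discovery capabilities would never examine.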

The biggest challenge they faced was getting their cross-functional IT and security teams to agree on how to implement and then deploy a new critical infrastructure in a timely manner. They had a cross-organizational team effort which was quite large in scope – it consisted of architects, developers, operations, and IT security teams. Reaching consensus from all parties involved took a tremendous amount of time and effort. In the end, all involved agreed they needed a comprehensive focus on supporting the “lift and shift” effort of cloud migration for the best results and ROI without ever compromising the well-earned trust attached to their brand.

It is interesting to note that although the global security industry had been talking about API security for as long as three years, nothing was available in the industry for their project at that time. API security is very different from web app security. Both web apps and APIs use HTTPS as a common protocol, yet no traditional web app security tool can deal with the challenges of securing modern APIs such as REST and GraphQL.

As the company moved to an API- and microservices-based way of designing and building applications, they knew they had to start early on building out next-generation security practices to protect that type of data and delivery model. With an aggressive cloud migration underway, the security team had been tasked with the enormous undertaking of lifting vast amounts of application and data workloads from numerous on-premise locations, and carefully shifting everything to Amazon Web Services (AWS) in the public cloud.

The goal was to complete this massive effort in 18 months, resulting in significant cost savings estimated to exceed $100 million. With this rapid transfer of data and application workloads, the opportunity for exposure of sensitive information increased dramatically. With the migrated enterprise apps and data more vulnerable, and with new security exposures being discovered, there was an immediate need to constantly monitor the growing attack surface and to be sure they had the best security controls in place. Because more apps and sensitive data live in the cloud than on-premise, data is more vulnerable and easily exposed through publicly facing APIs.

Previously the company, like the industry at large, used pen testers (often called red teams) on a quarterly or annual basis to perform similar assessments. Pen testing has been a vital part of assessing security for some time. This is where someone actually breaks into applications, networks, or underlying infrastructure services to determine where the holes are, uncovers the critical security issues of a system, shows how vulnerabilities are exploited, and offers steps to remediate them.

With more Agile development and automated CI/CD processes employed, manual pen testing has become more difficult to manage, and its results are often obsolete by the time they are presented. With digital migration, pen testing conducted by people is not truly able to keep up with the daily and weekly changes in the technology stack. Organizations need to assess their attack surfaces around the clock while data migrates from private to multi-cloud environments, and be able to respond to and remediate any critical issue immediately, not quarterly or annually.
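One way to picture continuous assessment, as opposed to a quarterly snapshot, is to diff the exposed surface every time something changes. The sketch below is a simplified illustration under assumed inputs: each snapshot is a plain set of strings naming exposed APIs and queues, and the `diff_attack_surface` function and sample entries are hypothetical.

```python
from typing import Dict, List, Set


def diff_attack_surface(previous: Set[str], current: Set[str]) -> Dict[str, List[str]]:
    """Compare two snapshots of exposed assets and report what changed."""
    return {
        "newly_exposed": sorted(current - previous),
        "no_longer_exposed": sorted(previous - current),
    }


# Illustrative snapshots taken one day apart (hypothetical asset names).
monday = {"GET /v1/loans", "POST /v1/loans"}
tuesday = {
    "GET /v1/loans",
    "POST /v1/loans",
    "GET /v1/loans/export",
    "queue:loan-events",
}

changes = diff_attack_surface(monday, tuesday)
for asset in changes["newly_exposed"]:
    print(f"review needed: {asset}")
```

Run after every deployment, a comparison like this flags new exposure within hours, whereas a quarterly pen test would first see it months later.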

The IT team at this company initiated a refactoring process, which means they took existing on-premise applications and made the changes necessary for them to run in the cloud. They had to switch the API gateways from one vendor to a completely different set of API vendors. Their DevOps team created an entirely new framework to “glue” it all together, after they had completely taken apart the existing framework.

Along the way there were timing delays and challenges to get the authentication and authorization setup to include proper MFA enforcement. The new gateways became a shared surface across multiple AWS accounts, but they developed a centralized point connecting everything without opening network connections.

Additional measures for securing APIs and API gateways were not as critical when applications and the infrastructure were on-premise, because there was a perimeter firewall keeping those APIs private. In the cloud, all APIs, private or public, are exposed. There are many more vulnerabilities and opportunities for data to be stolen, because APIs must be accessible when built and hosted in the cloud.
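Once the perimeter firewall is gone, a basic policy check becomes essential: which reachable APIs accept requests without authentication, and which of those touch sensitive data? The following is a minimal sketch of such a check. The `ApiRecord` fields, the severity labels, and the sample APIs are assumptions made for illustration, not the policies this customer or any vendor actually uses.

```python
from typing import List, NamedTuple, Tuple


class ApiRecord(NamedTuple):
    """Hypothetical inventory entry for one API."""
    name: str
    internet_reachable: bool
    requires_auth: bool
    handles_pii: bool


def find_violations(apis: List[ApiRecord]) -> List[Tuple[str, str]]:
    """Flag reachable APIs that do not require authentication."""
    findings = []
    for api in apis:
        if api.internet_reachable and not api.requires_auth:
            # Unauthenticated access to PII is treated as the worst case.
            severity = "critical" if api.handles_pii else "high"
            findings.append((api.name, severity))
    return findings


# Illustrative inventory only.
inventory = [
    ApiRecord("loan-status", True, True, True),
    ApiRecord("document-upload", True, False, True),
    ApiRecord("health-check", True, False, False),
    ApiRecord("batch-export", False, False, True),
]

findings = find_violations(inventory)
for name, severity in findings:
    print(f"{severity}: {name} is reachable without authentication")
```

A real program would evaluate many more rules, but the shape is the same: an accurate inventory first, then automated policy checks against every entry.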

Depending on how applications are written, there is also the potential for additional attack vectors such as messaging queues, which like APIs are accessible in cloud-native apps. The team deployed solutions from Data Theorem, Google Apigee, AWS, and others, and they have been able to accomplish their goals to keep customer data secure, while calculating, monitoring, managing and remediating any potential threats or attacks, to help assure a successful digital migration. These solutions work together to form what is called an “intercloud.”

Using AWS has allowed them to learn more about cloud templating, and this project overall has brought in new solutions and discoveries. It has also enabled their team to quickly implement and manage a completely new way of handling its most sensitive data.

As one of the world’s largest financial mortgage companies, this organization takes great pride in securing sensitive customer data. This is and always has been their top priority from a security and privacy perspective.

One of the most interesting results from this project was that they gained visibility into areas of their data they didn’t even know were possible to detect. It is always said, “You cannot secure what you don’t know.”

Because they had deployed Data Theorem, they were able to comply with security policies around cloud-native apps and APIs, which provided a much higher level of vulnerability management and oversight to protect sensitive data. This led to millions of dollars in ROI.

They have been able to reduce risk. With Data Theorem conducting more than 85 million scans of their API cloud environment, the public cloud environment is monitored continuously, with thousands of scans per day validating that their applications and APIs are not exposed to exploits that can lead to a data breach. This has protected their reputation as a trusted brand: the APIs handling personally identifiable information (PII) have never been compromised. They have been able to identify and resolve more than 5,000 policy violations before they were ever exploited by an outside attacker.

As a result of this critical project, this global company has been successfully migrating critical applications and the associated data to a cloud environment, all securely.

As their teams researched vendor solutions that would work together for digital migration, they discovered new API-related solutions they had not realized existed. What they feel is most innovative about their project is that they are an early adopter: while “lift and shift” to the cloud is just gaining momentum around the world, they are already securing APIs and the API gateway with capabilities recently introduced to the industry. They feel they are innovating and taking part in the industry’s cloud migration as it develops, perhaps even creating an important security blueprint for the industry.

Doug Dooley is the COO of Data Theorem.