Yesterday’s Hype

How analytics has become today’s reality

- By Robert Muehlbauer

- Dec 01, 2021

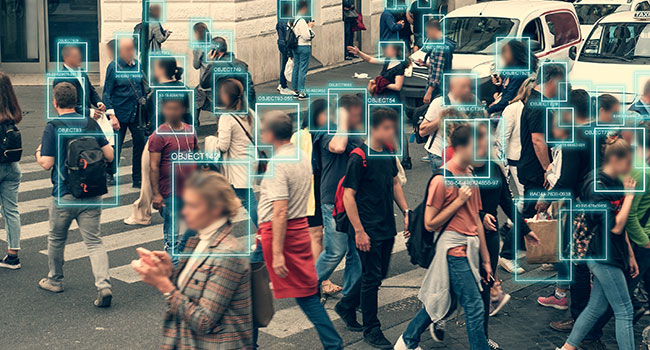

Let’s admit it. When analytics first hit the market, they got off to a rocky start: lots of hype and high expectations. When it came to delivering on those promises, their performance often fell short of the mark. Despite this somewhat checkered past, enthusiasm for the potential of analytics has not waned, and over the last five years it has begun to pay off. Today’s analytics are very different from their early predecessors. So what has changed?

A Much-improved Video Ecosystem

It all comes down to better hardware and more advanced software tools. Steady improvements across the entire technology ecosystem (lenses, sensors, chipsets and development platforms, as well as machine and deep learning modules) are making today’s analytics far more accurate and reliable than their predecessors.

Lens technology. It starts with matching the right lens to the surveillance task so that quality images are captured. Advances in optics have led to more choices: lenses with varying fields of view, different levels of zoom and even different levels of light sensitivity. Lenses are also delivering better resolution, contrast, clarity, and depth of field.

Sensor technology. Sensors have also improved. Today’s sensors deliver greater dynamic range, capturing usable image detail in both bright light and shadow. They also outperform their predecessors at eliminating digital noise and capturing color in low-light conditions.

Chipsets. Today’s chipsets are specifically optimized for surveillance applications and provide far more horsepower than previous generations. They can run complex algorithms faster and with greater accuracy. They can take the raw data from the sensor and improve the image with more saturated, realistic color, clearer images of moving objects, and enhanced detail in backlit scenes and in scenes with large differences between the lightest and darkest areas. In addition, they support enhanced compression standards such as H.264 and H.265, which allow higher-resolution images to be transmitted at lower bandwidth.

Open development platform and artificial intelligence. On the software side, today’s development platforms provide more flexibility for modeling algorithms and for testing and revising them to improve their accuracy. Drawing on artificial intelligence, both machine and deep learning, developers have been able to create algorithms capable of identifying relationships in data, recognizing images and patterns, and deducing high-level meaning.

It is also important to note that open development platforms, by their very nature, foster innovation. Open-source communities encourage contribution and collaboration, so their projects benefit from many contributors. As a result, open-source projects capitalize on diverse thinking, which can accelerate development compared with projects conducted in isolation or within the confines of a proprietary system.

Integration with Other Security Technologies

Because they are yielding dependable results, analytics have begun playing a larger role in the marriage between surveillance cameras and other security technologies.

Access control. Analytics are being incorporated into multi-factor authentication systems. Facial recognition analytics can rapidly compare the photos on file for card credentials against live video to verify that the person presenting a card is its authorized holder.
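As a rough illustration of the card-plus-face check described above, the sketch below compares a face template enrolled for a card against one extracted from live video. It is a minimal sketch under stated assumptions: the enrollment table, the three-element vectors (real face templates come from a trained recognition model and are much longer), the `authenticate` helper and the 0.9 cosine-similarity threshold are all hypothetical and do not represent any vendor's API.

```python
import math

# Hypothetical enrollment table: card ID -> face template stored at enrollment.
# Real templates come from a trained face-recognition model and have many more
# dimensions; these short vectors are placeholders for illustration.
ENROLLED = {
    "card-1001": [0.12, 0.85, 0.51],
    "card-1002": [0.90, 0.10, 0.42],
}

MATCH_THRESHOLD = 0.9  # assumed cutoff; real systems tune this per vendor guidance


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def authenticate(card_id, live_template):
    """Factor 1: the card must be enrolled. Factor 2: the live face must match it."""
    enrolled = ENROLLED.get(card_id)
    if enrolled is None:
        return False
    return cosine_similarity(enrolled, live_template) >= MATCH_THRESHOLD


print(authenticate("card-1001", [0.11, 0.86, 0.50]))  # face matches the cardholder
print(authenticate("card-1001", [0.90, 0.10, 0.42]))  # someone else's face
```

Requiring both factors means a stolen card alone is not enough, and neither is a face that matches some other enrolled user.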

Network audio. Analytics are becoming part of proactive verbal deterrent systems. They can detect movement, distinguish between a person and an animal, and trigger a prerecorded message over network audio to warn away an intruder.
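The person-versus-animal decision can be pictured as a simple filter on detection events. In this sketch the `Detection` fields, the 0.6 confidence threshold and the clip name are hypothetical. A real deployment would call the speaker through the vendor's own interface instead of collecting clip names.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical event emitted by a video analytic."""
    object_class: str  # e.g. "person" or "animal"
    confidence: float  # classifier confidence, 0.0 to 1.0
    in_zone: bool      # True if the object is inside the protected area


MIN_CONFIDENCE = 0.6  # assumed threshold


def should_warn(d):
    """Only a confidently classified person inside the zone triggers audio."""
    return d.object_class == "person" and d.confidence >= MIN_CONFIDENCE and d.in_zone


def handle_events(events, clip="warning.wav"):
    """Return the clips that would be played; a real system would call the speaker."""
    return [clip for d in events if should_warn(d)]


events = [
    Detection("animal", 0.95, True),   # a deer in the yard: ignored
    Detection("person", 0.90, True),   # intruder in the zone: warn
    Detection("person", 0.90, False),  # passer-by outside the zone: ignored
]
print(handle_events(events))
```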

Radar. Analytics serve as the link between radar and the video camera. When radar detects motion, analytics can trigger a network camera to auto-track movement based on coordinates from the radar.
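One way to picture that hand-off is a conversion from a radar position to pan and tilt angles for a PTZ camera. This sketch assumes the radar and camera share one mounting point, the radar reports targets as (x, y) ground-plane coordinates in meters, pan 0 points along the radar's boresight, and the ground is flat. The function name is hypothetical, and actual products handle calibration and coordinate mapping in their own ways.

```python
import math


def radar_to_pan_tilt(x_m, y_m, camera_height_m):
    """Convert a radar ground-plane position (meters) to PTZ pan/tilt in degrees.

    Assumptions: radar and camera share one mounting point, +y is the radar's
    boresight (pan 0), positive pan turns toward +x, the ground is flat, and
    negative tilt points below the horizon.
    """
    ground_range = math.hypot(x_m, y_m)
    pan = math.degrees(math.atan2(x_m, y_m))
    tilt = -math.degrees(math.atan2(camera_height_m, ground_range))
    return pan, tilt


# A target 10 m to the right and 10 m ahead of a camera mounted 5 m high.
pan, tilt = radar_to_pan_tilt(10.0, 10.0, 5.0)
print(f"pan {pan:.1f} deg, tilt {tilt:.1f} deg")
```

Recomputing these angles on each radar update is what lets the camera follow the target as it moves.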

Environmental sensors. Integrating analytics with environmental sensors makes it possible to assess the threat level posed by changing conditions, everything from snow, tornadoes and floods to anomalies in traffic patterns and even air quality.

Audio analytics. In addition to video analytics, emerging audio analytics are taking sound detection to new levels. Instead of simply measuring decibel levels, they can distinguish and alert on specific sound patterns, such as a weapon firing, glass breaking, a car alarm or aggressive voices.

Setting Realistic Expectations

Although analytics have come a long way, the industry’s history makes it important to set realistic expectations about what specific analytics can and cannot do. Integrators and installers need to do their due diligence.

Review the reference materials from manufacturers and developers, such as manuals and installation guides, to understand the best use cases. Talk to their technical staff for additional insights and recommendations, and draw on their support expertise during installation to optimize performance and ensure success.

Following Best Practices

Many factors can influence the success or failure of analytics in an installation, but following best practices helps avoid common pitfalls. It usually takes a bit of research to find out whether what you are trying to achieve is realistic, and it helps to start by defining the operational requirements, or purpose, of the surveillance. After doing a site survey to scope out what is possible, what else might you consider when implementing video analytics? Here are a few examples:

People counter. A people counter analytic counts the people passing through an entrance and the direction in which they pass. It can also estimate how many people currently occupy an area and their average visit time. In addition to following the camera’s installation guide, there are a few recommended steps to ensure that the analytic behaves as expected:

- Install the camera at or above the minimum height recommended by the manufacturer.
- Mount it directly above the point where people pass.
- Avoid strong sunlight and sharp shadows in the camera view.
- Do not place cameras where moving objects, such as doors or escalators, can interfere with the counting area.
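Counting by direction amounts to watching tracked people cross a virtual line. The sketch below is a simplified illustration, not any product's algorithm. It assumes an upstream tracker already supplies a track ID and the vertical image position of each person in every frame of an overhead view, and it assumes that moving down the image means entering. The class and its names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LineCounter:
    """Counts tracked people crossing a horizontal virtual line in an overhead view.

    Assumes an upstream tracker reports (track_id, y) for each person each frame,
    with y increasing down the image, and that crossing downward means "in".
    """
    line_y: float
    entered: int = 0
    exited: int = 0
    last_y: dict = field(default_factory=dict)  # track_id -> previous y

    def update(self, track_id, y):
        prev = self.last_y.get(track_id)
        if prev is not None:
            if prev < self.line_y <= y:
                self.entered += 1  # crossed the line moving down (in)
            elif prev >= self.line_y > y:
                self.exited += 1   # crossed the line moving up (out)
        self.last_y[track_id] = y

    @property
    def occupancy(self):
        """Estimated people currently inside (never negative)."""
        return max(self.entered - self.exited, 0)


counter = LineCounter(line_y=240)
for track_id, y in [(1, 200), (1, 260), (2, 300), (2, 220), (3, 100), (3, 250)]:
    counter.update(track_id, y)
print(counter.entered, counter.exited, counter.occupancy)
```

With these positions, two people cross into the area and one leaves, so the estimated occupancy is one. The sketch also shows why the mounting guidance matters: a door or escalator in the counting area would be tracked and counted like a person, and shadows can produce false tracks.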

License plate recognition. License plate recognition identifies the pixel patterns that make up a license plate, translates the letters, numbers and graphics on the plate, and compares them in real time against a database of plates. When selecting a camera that suits the location, it helps to know its detection range and detection time. Some LPR cameras work well in traffic moving at up to 45 mph; others can handle vehicle speeds of 90 mph or faster.
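Detection time matters because a faster vehicle spends less time in front of the camera. The arithmetic below shows how long a vehicle stays in a detection zone and how many frames the camera captures during that time. The 10 m zone length and 30 fps frame rate are assumed example values, not specifications of any LPR camera.

```python
MPH_TO_MPS = 0.44704  # exact: 1609.344 m per mile / 3600 s per hour


def time_in_zone_s(zone_length_m, speed_mph):
    """Seconds a vehicle at constant speed spends crossing the detection zone."""
    return zone_length_m / (speed_mph * MPH_TO_MPS)


def frames_in_zone(zone_length_m, speed_mph, fps):
    """Whole frames captured while the vehicle is in the zone."""
    return int(time_in_zone_s(zone_length_m, speed_mph) * fps)


ZONE_M = 10.0  # assumed detection-zone length
FPS = 30       # assumed frame rate

for mph in (45, 90):
    t = time_in_zone_s(ZONE_M, mph)
    print(f"{mph} mph: {t:.2f} s in zone, {frames_in_zone(ZONE_M, mph, FPS)} frames")
```

Under these assumptions, a vehicle at 45 mph spends roughly half a second in the zone and one at 90 mph about a quarter second. Doubling the speed halves the number of frames available for reading the plate.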

Recommended camera adjustments. Check the autofocus and fine-tune it manually if needed. Turn off wide dynamic range. Set the local contrast to a level that reduces noise at night but keeps license plates visible. Set the shutter speed to maximum. Adjust the gain to optimize the trade-off between blur and noise. Set the iris to automatic mode.

Recommended mounting. Avoid mounting the camera facing direct sunlight (at sunrise and sunset) to prevent image distortion. Keep the mounting angle at no more than 30° in any direction. The mounting height should be about half the distance between the camera and the vehicle.
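The 30° limit and the height rule are consistent: mounting at half the distance gives a vertical angle of about 26.6°. The helper below checks a proposed mounting against the 30° limit. It is a geometric sketch that treats the plate as being at ground level. The function names and the lateral-offset parameter are illustrative.

```python
import math

MAX_ANGLE_DEG = 30.0  # limit from the mounting guidance


def mounting_angles_deg(height_m, horizontal_distance_m, lateral_offset_m=0.0):
    """Vertical and horizontal angles from the camera to the plate, in degrees.

    Simplification: treats the plate as being at ground level.
    """
    vertical = math.degrees(math.atan2(height_m, horizontal_distance_m))
    horizontal = math.degrees(math.atan2(lateral_offset_m, horizontal_distance_m))
    return vertical, horizontal


def within_limits(height_m, horizontal_distance_m, lateral_offset_m=0.0):
    """True if both angles stay within the 30-degree guidance."""
    v, h = mounting_angles_deg(height_m, horizontal_distance_m, lateral_offset_m)
    return v <= MAX_ANGLE_DEG and abs(h) <= MAX_ANGLE_DEG


print(mounting_angles_deg(5.0, 10.0))  # half-distance rule
print(within_limits(5.0, 10.0), within_limits(7.0, 10.0))
```

Raising the camera to 7 m at the same 10 m distance pushes the vertical angle to roughly 35°, past the limit.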

Facial recognition. This is a category of biometric security: a way of identifying or confirming a person’s identity from their face in photos, in video or in real time. Different operational goals affect the choice of lens, camera and field of view. Do you want to detect whether a person is present? Do you want to recognize that the person is the same as someone seen before?

Do you want to be able to identify the person beyond a reasonable doubt? Because real-life scenes tend to be complex, it is difficult, if not impossible, to apply a single mathematical equation to determine the exact pixel density or resolution needed to meet these different operational objectives. The chart below, however, offers a general guideline.

Once you understand the operational requirements, you can use the corresponding pixel density from the chart to identify the minimum resolution required and calculate the maximum width of the scene in which identification can occur. Bear in mind that the lower the resolution, the narrower the maximum scene width. The operational requirements should also specify the capture line, the imaginary line across the camera’s field of view where the person is captured. The further back in the image the capture line is placed, the wider it becomes and the larger the identification area. However, the further the person is from the camera, the lower the pixel density.
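The relationships in this paragraph reduce to a few lines of trigonometry. The scene width covered at distance d by a lens with horizontal field of view θ is 2·d·tan(θ/2). Pixel density is the horizontal resolution divided by that width, and the maximum scene width is the horizontal resolution divided by the required density. The 250 px/m figure below is only a placeholder; substitute the value from the chart for the operational objective at hand. The function names are illustrative.

```python
import math


def scene_width_m(distance_m, hfov_deg):
    """Width of the scene covered at a given distance: 2 * d * tan(hfov / 2)."""
    return 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)


def pixel_density_px_per_m(h_resolution_px, distance_m, hfov_deg):
    """Horizontal pixels available per meter of scene at that distance."""
    return h_resolution_px / scene_width_m(distance_m, hfov_deg)


def max_scene_width_m(h_resolution_px, required_px_per_m):
    """Widest scene that still meets the required pixel density."""
    return h_resolution_px / required_px_per_m


def max_capture_distance_m(h_resolution_px, hfov_deg, required_px_per_m):
    """Farthest capture line at which the required density is still met."""
    width = max_scene_width_m(h_resolution_px, required_px_per_m)
    return width / (2 * math.tan(math.radians(hfov_deg) / 2))


REQUIRED_PX_PER_M = 250  # placeholder; use the chart's value for your objective

for h_res in (1920, 1280):
    print(h_res, "px wide:", max_scene_width_m(h_res, REQUIRED_PX_PER_M), "m max scene width")
print(f"{pixel_density_px_per_m(1920, 6.0, 90.0):.0f} px/m at 6 m with a 90 deg lens")
```

At the placeholder density, a 1920-pixel-wide image supports a scene about 7.68 m wide, while 1280 pixels supports only 5.12 m. This matches the point that lower resolution narrows the maximum scene width. These figures are a starting estimate to confirm on site, not a substitute for validation.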

Before the application goes live, it is best to use a pixel counter tool to validate whether your configuration choices will meet the customer’s operational expectations.

What the Future Holds for Analytics

Technology is evolving so quickly that if you can think it, analytics will eventually be able to do it. We are already seeing analytics detect and evaluate ever more subtle nuances in behavior and environment, whether in the color and shape of people, vehicles or objects, the way they move through a scene, or even how much heat they generate. We are also seeing analytics applied to verifying compliance with health and safety standards, such as wearing a hard hat on a construction site or a mask indoors during the COVID pandemic.

More analytics are harnessing the power of machine and deep learning to predict performance and automatically trigger a range of responses to specific anomalies, such as redirecting traffic and changing the timing of traffic lights in real time to alleviate congestion.

Because analytics can discern so much so quickly, we will likely see growing demand for use-specific applications. It will be up to trusted partners such as security integrators to stay abreast of what is feasible and to separate hype from reality. That way, they can help their customers implement solutions that actually meet their expectations.

This article originally appeared in the November / December 2021 issue of Security Today.