SPONSORED

AI Beyond the Hype

Today, there are in-depth discussions about what ‘might be’ possible with the AI, machine learning and deep learning-based analytics products on the market. Much of the conversation centers on practical ways to use deep learning and neural networks, and on how these techniques can improve analytics and significantly reduce false positives for important events. When talking to end users, it becomes clear that many still don’t take full advantage of analytics. In some cases, this is due to a history of false positives with previous-generation technology; in others, users simply don’t believe that reliable analytics are achievable for their unique needs. The good news is that with AI, or more accurately, deep learning and neural networks, we are making great strides toward increasing accuracy.

In the past, developers tried to define what an object is, what motion is, and which motion is interesting enough to track versus false-positive “noise” that should be ignored. Some classic examples of noise are wind blowing leaves on a tree, rain, snow or a plastic bag floating by. For far too long, something as simple as motion detection has been plagued by false positives generated by wind. Users could reduce the sensitivity to eliminate false motion in a light breeze, yet still see false positives triggered in a wind storm. Using neural networks and deep learning to define objects such as humans, cars, buses and animals means that traditional analytics can now focus on objects. These new algorithms have been taught to recognize a human being by seeing thousands of images of humans. Once an algorithm has learned from these examples, it can apply that knowledge to existing analytics. For example, a car crossing a line at an entry gate might be acceptable, but if a person crosses that same line, we might want an alert. A shadow should be ignored, and swaying tree branches shouldn’t be considered. A car driving in the wrong direction warrants an alert, whereas people moving about freely are fine. All of the traditional motion analytics, such as appear/disappear, line crossing, object left behind and loitering, will be more accurate and capable of additional refinement using AI and the power of object recognition.
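To make the entry-gate example concrete, here is a minimal Python sketch of a class-aware line-crossing rule. It is an illustration under stated assumptions, not any vendor's implementation: the class labels, the `ALERT_CLASSES` set and the function names are hypothetical, and the object's label and positions are assumed to come from an upstream detector and tracker.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float


def _orientation(a: Point, b: Point, c: Point) -> float:
    """Signed area: > 0 if a->b->c turns counter-clockwise, < 0 if clockwise."""
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x)


def crosses_line(prev: Point, curr: Point, line_start: Point, line_end: Point) -> bool:
    """True if the movement prev->curr strictly crosses the virtual line."""
    d1 = _orientation(line_start, line_end, prev)
    d2 = _orientation(line_start, line_end, curr)
    d3 = _orientation(prev, curr, line_start)
    d4 = _orientation(prev, curr, line_end)
    # The two segments intersect when each one straddles the other.
    return d1 * d2 < 0 and d3 * d4 < 0


# Hypothetical policy for the entry gate: people trigger alerts, cars do not.
# Shadows or swaying branches never receive one of these labels, so they
# cannot raise an alert at all.
ALERT_CLASSES = {"person"}


def should_alert(label: str, prev: Point, curr: Point,
                 line_start: Point, line_end: Point) -> bool:
    """Alert only when an object of an alerting class crosses the line."""
    return label in ALERT_CLASSES and crosses_line(prev, curr, line_start, line_end)
```

The design point is that the geometric test is unchanged from traditional analytics; object recognition only adds the `label` filter in front of it.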

Practical Applications and Limits for Security and Surveillance

Machine and deep learning-based analytics are actively being marketed and productized by many vendors, with Hanwha Techwin poised to deliver its first AI-based cameras this year. Existing data models can be purchased from third-party companies, but the question remains: How accurate are they? Additionally, many of these new AI-based analytics are offered as ‘post processes’ run on a server or workstation, whereas a majority of customer use cases demand real-time feedback.

It takes a significant amount of computational power for a machine to “self-learn” in the field, so “controlled learning” is best left to powerful server farms in R&D laboratories and universities for now. Real-time analytics also require that video streams be decompressed before they can be analyzed. While this might make sense for installations with a few cameras connected to a server, it’s clearly an unacceptable resource drain to decode (open) each compressed camera stream when hundreds of cameras are involved. Once an algorithm and data set are created, they can be ‘packed up’ and embedded on the edge to perform real-time detection and alerts for the specific conditions they have been trained to recognize. Such a model will not self-learn, but it can provide a useful function by recognizing known objects or behaviors with minimal errors.

Having deep learning analytics on the edge, analyzing the image before it is compressed and sent to storage, is clearly what everyone has in mind when they imagine the usefulness of such technology to alert staff and make decisions in real time.

Value Proposition of Deep Learning-based Analytics

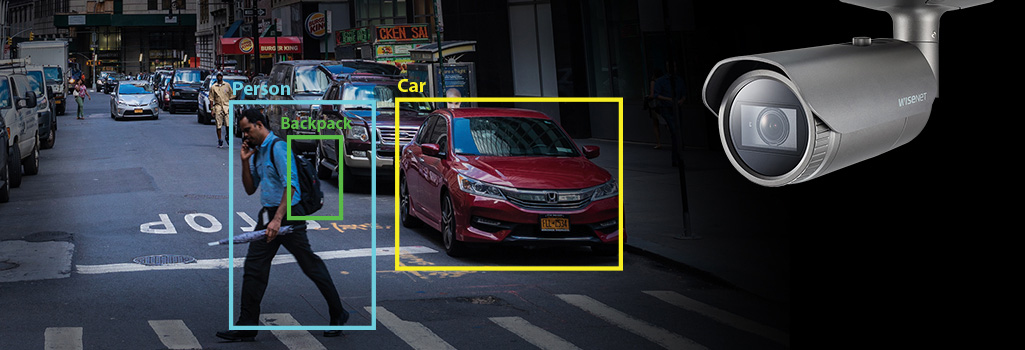

The more we automate video processing and decision making, the more we can save operators from redundant and mundane tasks. Computers capable of sophisticated analysis, self-learning and basic decision making are much better and faster at analyzing large volumes of data while humans are best utilized where advanced intelligence, situational analysis and dynamic interactions are required. Increased value comes from the efficiencies gained when each resource is used most effectively. The goal is to help operators make better informed judgements by providing detailed input and descriptive metadata. A primary focus for AI in cameras today is to reduce, if not eliminate, false positives in existing analytics. Powerful object recognition allows us to completely remove common noise events caused by wind, rain and snow. AI-based cameras can precisely recognize objects such as vehicles and people, as well as capture identifying characteristics such as colors (up to two per object), carried items such as bags, backpacks, hats and glasses and even approximate gender and age. With object recognition, we can make existing analytics much more powerful—send an alert when a car crosses a line, while allowing humans to cross with no alert. By capturing additional descriptive metadata, AI-enabled cameras will make forensic search within supported VMS more powerful than ever before.
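As a rough illustration of how descriptive metadata can drive forensic search, the Python sketch below filters stored object records by class, color and attribute. The `ObjectRecord` fields and the `search` function are hypothetical names chosen for this example; they do not reflect any particular camera's or VMS's schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectRecord:
    """One detected object, as a camera might describe it (assumed schema)."""
    camera_id: str
    timestamp: float                      # seconds since epoch
    label: str                            # e.g. "person", "vehicle"
    colors: tuple = ()                    # up to two colors per object
    attributes: frozenset = frozenset()   # e.g. {"backpack", "glasses"}


def search(records, label=None, color=None, attribute=None, start=None, end=None):
    """Return the records that match every filter that was supplied."""
    results = []
    for record in records:
        if label is not None and record.label != label:
            continue
        if color is not None and color not in record.colors:
            continue
        if attribute is not None and attribute not in record.attributes:
            continue
        if start is not None and record.timestamp < start:
            continue
        if end is not None and record.timestamp > end:
            continue
        results.append(record)
    return results
```

A query such as `search(records, label="person", color="red", attribute="backpack")` then narrows hours of footage to a handful of candidate events without decoding any video.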

We see airport security, subways and other mass transit customers as early adopters of this technology. Detecting objects left behind is crucial in the world we live in today. The benefits to retail organizations wanting to optimize their business operations are equally important. As the technology continues to evolve, it may even be possible to rank reactions to products based on learned postures and skeletal movements. AI-based cameras enable systems integrators to sell value far beyond a security system. Integrations with other systems, even customer relationship management (CRM) systems, will be not only possible but desirable as a way to enhance metrics for retail establishments.

What to Expect in the Near Term

Beyond enhanced accuracy of existing analytics, solutions for the near future might feature hybrid approaches where on-the-edge hardware combines with server software to deliver a powerful combination of analysis, early warning and detection capabilities. Comprehensive facial recognition and comparisons with large databases will not be practical on the edge anytime soon, so that is a good example of where servers can do some heavy lifting. Object recognition and classification are perfectly suited for in-camera analytics: the camera knows it is a face and can pass that metadata directly to a server, which can further interpret the unique characteristics and cross-reference them with database entries. We can easily imagine that AI-based analytics might follow the same progression as traditional analytics. The first implementations were server-based, then there was a migration to a hybrid approach where some algorithms ran in the camera but a server was still required for in-depth analysis, and ultimately the entire analytic process could be hosted on the edge.
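The hybrid split described above can be sketched in a few lines of Python. This is only an assumed shape for the hand-off: the event fields, the `HEAVY_LABELS` set and the routing names are hypothetical, and the database comparison itself is deliberately left out.

```python
import json

# Hypothetical: labels whose follow-up work is too heavy for the camera.
HEAVY_LABELS = {"face"}


def build_edge_event(camera_id: str, label: str, bbox: tuple, timestamp: float) -> str:
    """Camera side: classify in-camera, then send compact metadata, not pixels."""
    return json.dumps({
        "camera_id": camera_id,
        "label": label,
        "bbox": list(bbox),        # x, y, width, height in pixels
        "timestamp": timestamp,
    })


def route_on_server(event_json: str) -> str:
    """Server side: decide which pipeline should handle an incoming event."""
    event = json.loads(event_json)
    if event["label"] in HEAVY_LABELS:
        # The server would go on to compare against database entries here.
        return "database_match"
    # Everything else is simply indexed for alerts and later search.
    return "index_metadata"
```

Because the camera has already decided that the object is a face, the server spends its resources only on the events that need the expensive comparison.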

The Future Looks Bright for Image Classification

Being able to identify what’s going on in a still or moving image is one of the most interesting areas of development in machine and deep learning. With moving images, analysis goes beyond simply classifying objects, to classifying the behaviors and moods of those in the frame. Already, vendors are talking about ‘aggression detection’ as a desirable feature, but it’s easy to imagine that the cost to an organization of a false positive or false negative in such a scenario could be very high. For that reason, Hanwha is taking a conservative approach as it develops its own algorithms in-house with teams of experts.

Big players such as Facebook, Google and Nvidia are making significant investments in AI and machine learning to classify images and objects as well as text and speech. Some of this technology will trickle down into the security industry, but much of it will have to be custom developed to suit the needs of surveillance workflows. It may be acceptable to mislabel a person’s face on Facebook, but security organizations should not be willing to make such a mistake. This is one of many reasons why our industry must insist on higher standards.

Customer expectations for AI and all its variants are understandably high. However, careful consideration is needed when choosing any AI-based solution.

Conclusion

At Hanwha Techwin, our R&D department continues to actively develop deep learning algorithms that provide extremely high degrees of accuracy that the business community can rely upon. We are training edge devices to correctly distinguish actions and motion of interest from normal environmental variables such as wind, snow and rain. Our continued focus is to streamline operations and deliver truly actionable intelligence. We are currently developing our Wisenet 7 chip, which will focus on providing deep learning analytics on the edge. Hanwha invests a significant amount of resources to develop its own SoC (System on Chip). This allows us to make edge devices smarter, while focusing on an analytic engine geared toward video surveillance-specific intelligence. As we roll out our first cameras this year, our development so far has shown that the benefits to surveillance analytics will be substantial as this field continues to evolve.